I recently had the opportunity to work closely with one of the most exciting new datasets in Earth Engine: Google’s Satellite Embeddings, produced by Google DeepMind’s AlphaEarth Foundations model. This is not just another dataset — it represents a new way of working with Earth observation.

Unlike traditional task-specific models, AlphaEarth Foundations is a general-purpose deep learning foundation model. It has been trained on an extraordinary variety of data sources to capture spectral, spatial, temporal, and even climatic context. The result is a set of 64-dimensional embeddings for every pixel on Earth at 10 metre resolution, updated annually from 2017 onwards.

What Do Satellite Embeddings Contain?

The embeddings are vectors — 64 values per pixel — that represent how that place looks when observed through multiple satellites and datasets over the course of an entire year.

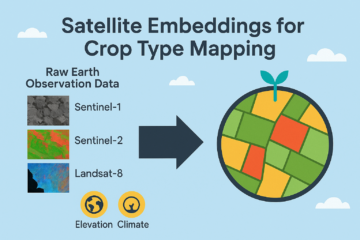

The model was trained with:

- Optical and radar data: Sentinel-1 C-band SAR, Sentinel-2 multispectral, Landsat-8 optical and thermal.

- Biophysical data: GEDI canopy height, ALOS PALSAR-2 radar, and global elevation datasets.

- Geophysical and climate data: ERA5 climate reanalysis, GRACE gravity and mass grids.

- Textual labels: auxiliary information to anchor certain patterns.

Each embedding captures:

- Temporal context – the full year’s worth of observations from all these sensors.

- Spatial context – information from a surrounding neighbourhood of about 1.28 km.

That means two pixels with identical reflectance values but different surroundings (for example, bare soil in a field versus bare soil along a road) will have different embeddings.

How Does It Work?

The model takes massive streams of Earth observation data and compresses them into a single 64-value vector per pixel. Each pixel therefore becomes a point on the surface of a unit sphere in 64-dimensional space:

- Values range between –1 and +1.

- Each vector has unit length, so the dot product of two vectors equals their cosine similarity, which makes comparisons simple.

- They cannot be interpreted band by band. All 64 values must be used together.
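This small pure-Python sketch makes the unit-length property concrete. It uses made-up 64-value vectors rather than real embeddings and normalises them. It then checks two things: every component falls between –1 and +1, and the plain dot product of two unit vectors equals their cosine similarity.

```python
import math

def dot(a, b):
    """Sum of element-wise products of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    """Scale a vector to unit length (L2 norm of 1)."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def cosine_similarity(a, b):
    """Cosine of the angle between two arbitrary non-zero vectors."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

# Made-up 64-value vectors standing in for two pixels (not real embeddings).
raw_a = [math.sin(i + 1) for i in range(64)]
raw_b = [math.cos(i + 1) for i in range(64)]

a = normalize(raw_a)
b = normalize(raw_b)

# A unit vector has length 1, so each component lies between -1 and +1.
print(math.isclose(dot(a, a), 1.0))
print(all(-1.0 <= x <= 1.0 for x in a))
# For unit vectors the dot product alone gives the cosine similarity.
print(math.isclose(dot(a, b), cosine_similarity(raw_a, raw_b), abs_tol=1e-12))
```

This is also why the values can only be compared as whole vectors. A single component says little on its own, but the dot product over all 64 does.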

In Earth Engine, the embeddings are delivered as 64-band images (bands A00 to A63), split into 150 × 150 km tiles, each in its local UTM projection. You can mosaic these tiles to cover larger regions.

For me, the simplest way to think about embeddings is to compare them with Principal Component Analysis (PCA). PCA reduces the dimensionality of spectral bands, while embeddings reduce an enormous multi-sensor, multi-temporal dataset to 64 values. Unlike PCA, which is a linear projection, the embeddings are learned by a deep neural network and encode much richer context.

Working with Embeddings in Earth Engine

Using embeddings in Earth Engine feels very familiar. You filter an image collection, mosaic the tiles for your region of interest, and then you are ready to analyse.

Because the dataset has already been processed:

- No cloud masking is required.

- No index calculation is necessary.

- No sensor harmonisation or data fusion is needed.

You are left with a clean, analysis-ready set of features.

What Can You Do With Them?

1. Unsupervised Clustering

By sampling pixels and grouping them into clusters, embeddings can reveal land cover types with remarkably clean boundaries. The spatial context built into the vectors largely removes the salt-and-pepper noise common in pixel-based classification.
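The clustering step can be sketched in plain Python. This illustrates the idea and is not Earth Engine code: in Earth Engine you would typically sample the embedding image and train a clusterer such as `ee.Clusterer.wekaKMeans`.

The "pixels" here are synthetic 64-value unit vectors scattered around two made-up land-cover directions. The sketch uses spherical k-means, a k-means variant that compares unit vectors by dot product. That choice is an assumption, made because the embeddings are unit length.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def init_centroids(vectors, k):
    """Deterministic farthest-point start: take the first vector, then keep
    adding the vector least similar to every centroid chosen so far."""
    centroids = [vectors[0]]
    while len(centroids) < k:
        centroids.append(min(vectors, key=lambda v: max(dot(v, c) for c in centroids)))
    return centroids

def spherical_kmeans(vectors, k, iterations=10):
    """Cluster unit vectors by cosine similarity; returns one label per vector."""
    centroids = init_centroids(vectors, k)
    labels = []
    for _ in range(iterations):
        # Assign each vector to its most similar centroid.
        labels = [max(range(k), key=lambda c: dot(v, centroids[c])) for v in vectors]
        # Move each centroid to the re-normalised mean of its members.
        for c in range(k):
            members = [v for v, lab in zip(vectors, labels) if lab == c]
            if members:
                centroids[c] = normalize([sum(col) / len(members) for col in zip(*members)])
    return labels

# Synthetic "pixels": two hypothetical land-cover types with small noise.
rng = random.Random(42)

def noisy(direction):
    return normalize([x + rng.gauss(0.0, 0.02) for x in direction])

forest = [1.0] + [0.0] * 63
cropland = [0.0, 1.0] + [0.0] * 62
pixels = [noisy(forest) for _ in range(20)] + [noisy(cropland) for _ in range(20)]

labels = spherical_kmeans(pixels, k=2)
print(labels)
```

With groups this well separated, the first twenty pixels share one label and the last twenty share the other. Real embeddings are messier, and the number of clusters is a choice you have to make per region.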

2. Crop Type Mapping

Crop mapping is normally one of the hardest problems in remote sensing, requiring time-series modelling, sensor fusion, and climate response analysis. Embeddings simplify this drastically:

- Phenology is already encoded.

- Both radar and optical signals are integrated.

- ERA5 climate data is included.

With only a crop mask and aggregate crop statistics (for example, acreage per county), embeddings allow me to separate corn from soybean in Iowa with surprising accuracy — even without field labels.
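How exactly the aggregate statistics turn clusters into crop labels is not spelled out here. One simple possibility, assumed purely for illustration, works in two steps:

- Cluster the pixels inside the crop mask into as many groups as there are crops.
- Match clusters to crops by area rank, so the largest cluster takes the crop with the largest reported acreage.

The helper name and all numbers below are hypothetical.

```python
def label_clusters_by_area(cluster_pixel_counts, crop_areas):
    """Hypothetical helper: pair clusters and crops by descending area.

    cluster_pixel_counts: {cluster_id: number of 10 m pixels inside the crop mask}
    crop_areas: {crop_name: reported area from aggregate statistics}
    Any consistent area unit works, because only the ranking is used.
    """
    clusters = sorted(cluster_pixel_counts, key=cluster_pixel_counts.get, reverse=True)
    crops = sorted(crop_areas, key=crop_areas.get, reverse=True)
    return dict(zip(clusters, crops))

# Made-up inputs for a single county.
counts = {0: 3900, 1: 5200}
reported = {"corn": 55000, "soybean": 41000}
print(label_clusters_by_area(counts, reported))  # {1: 'corn', 0: 'soybean'}
```

Rank matching only makes sense when the crops' areas differ clearly. If they are close, comparing cluster area fractions with reported fractions across several counties would likely be more robust.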

3. Surface Water Detection

Water detection can be approached by clustering embeddings and then identifying which cluster corresponds to water using an index such as the Normalized Difference Water Index (NDWI). This “water detect” method yields clear water boundaries and can capture even small water bodies.
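This is a minimal sketch of the labelling step. It assumes the pixels have already been clustered and that green and near-infrared reflectance are available for the same pixels (for Sentinel-2, bands B3 and B8). It uses the McFeeters form of NDWI, (Green − NIR) / (Green + NIR), and treats the cluster with the highest mean NDWI as water. The labels and reflectance values are invented.

```python
def ndwi(green, nir):
    """McFeeters NDWI: (Green - NIR) / (Green + NIR); open water is usually positive."""
    return (green - nir) / (green + nir)

def water_cluster(labels, green, nir):
    """Return the cluster id whose pixels have the highest mean NDWI."""
    totals, counts = {}, {}
    for lab, g, n in zip(labels, green, nir):
        totals[lab] = totals.get(lab, 0.0) + ndwi(g, n)
        counts[lab] = counts.get(lab, 0) + 1
    return max(totals, key=lambda lab: totals[lab] / counts[lab])

# Hypothetical cluster labels and surface reflectance for six pixels.
labels = [0, 0, 1, 1, 2, 2]
green = [0.08, 0.09, 0.06, 0.05, 0.10, 0.12]
nir = [0.30, 0.28, 0.02, 0.01, 0.25, 0.20]

water_id = water_cluster(labels, green, nir)
water_mask = [lab == water_id for lab in labels]
print(water_id, water_mask)
```

The index is only used to name the cluster. The water boundary itself comes from the embedding clusters.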

4. Supervised Classification

For supervised workflows, embeddings act as a drop-in replacement for raw bands. A k-Nearest Neighbour (kNN) classifier works particularly well: because the embeddings are unit vectors, the cosine similarity between any two pixels is a consistent, directly comparable measure of how alike they are. With just a handful of high-quality samples, I can map mangroves versus water versus other land covers more accurately than with traditional inputs.
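The kNN idea can be sketched in a few lines of plain Python. In Earth Engine the equivalent would be training a classifier such as `ee.Classifier.smileKNN` on sampled embedding values.

The sketch below instead ranks a handful of hypothetical labelled samples by dot product (cosine similarity for unit vectors) and takes a majority vote. The vectors are 4-dimensional toys standing in for 64-value embeddings.

```python
import math
from collections import Counter

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def knn_classify(query, samples, k=3):
    """Majority vote among the k samples most similar to the query.

    samples: list of (unit_vector, label) pairs.
    """
    ranked = sorted(samples, key=lambda s: dot(query, s[0]), reverse=True)
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]

# Toy labelled samples (4-D stand-ins for 64-value embeddings).
training = [
    (normalize([0.9, 0.1, 0.0, 0.0]), "mangrove"),
    (normalize([0.8, 0.2, 0.1, 0.0]), "mangrove"),
    (normalize([0.0, 0.1, 0.9, 0.1]), "water"),
    (normalize([0.1, 0.0, 0.95, 0.0]), "water"),
    (normalize([0.1, 0.9, 0.1, 0.2]), "other"),
    (normalize([0.0, 0.8, 0.0, 0.3]), "other"),
]

print(knn_classify(normalize([0.85, 0.15, 0.05, 0.0]), training))
print(knn_classify(normalize([0.05, 0.0, 1.0, 0.05]), training))
```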

5. Regression

When predicting continuous values (like biomass or crop yields), embeddings can be paired with a Random Forest regressor. Here, embeddings replace the usual stack of spectral indices and ancillary datasets.

6. Object Detection via Similarity Search

This is perhaps the most exciting application. Because embeddings are vectors, I can compare them using cosine similarity.

- Select a pixel over an object of interest (a brick kiln, solar farm, or grain silo).

- Compute similarity between that vector and every other pixel.

- Pixels with high similarity scores point to other instances of the same object.

This means I can detect objects across large regions without training a deep learning model or purchasing expensive high-resolution imagery.
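The three steps of the similarity search amount to a per-pixel dot product followed by a threshold. In Earth Engine, this is a dot product across the 64 bands of every pixel.

The sketch below runs these steps on a handful of toy unit vectors. The reference vector, the pixels and the 0.9 cut-off are all hypothetical. In practice the threshold would need tuning for each object type and region.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def find_similar(reference, pixels, threshold=0.9):
    """Return indices of pixels whose cosine similarity to the reference
    (a dot product, since all vectors are unit length) meets the threshold."""
    return [i for i, p in enumerate(pixels) if dot(reference, p) >= threshold]

# Hypothetical reference pixel over a known object, e.g. a brick kiln.
reference = normalize([1.0, 1.0, 0.0, 0.0])

# Toy "image" of five pixels (4-D stand-ins for 64-value embeddings).
pixels = [normalize(v) for v in (
    [1.0, 1.0, 0.0, 0.0],   # the reference location itself
    [1.0, 0.9, 0.1, 0.0],   # a similar-looking site
    [0.0, 0.0, 1.0, 1.0],   # unrelated land cover
    [0.0, 1.0, 0.0, 0.0],   # only partly similar
    [0.9, 1.0, 0.0, 0.05],  # another similar-looking site
)]

print(find_similar(reference, pixels))
```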

7. Change Detection

By comparing embeddings from two different years, it becomes possible to quantify changes — whether in land cover, water extent, cropping dynamics, or disturbances such as wildfires.
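One simple way to quantify that change, assumed here for illustration, is to take the dot product of each pixel's embeddings from the two years. Identical vectors score 1, so 1 − similarity acts as a change score. The vectors and the 0.2 threshold below are hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def change_scores(before, after):
    """Per-pixel change score: 1 - cosine similarity of the two years' unit vectors.
    0 means no change; larger values mean the embeddings diverged more."""
    return [1.0 - dot(a, b) for a, b in zip(before, after)]

# Toy 3-D stand-ins for the same three pixels in two different years.
year_1 = [normalize(v) for v in ([1, 0, 0], [0, 1, 0], [0, 0, 1])]
year_2 = [normalize(v) for v in ([1, 0.05, 0], [1, 0, 0], [0, 0.02, 1])]

scores = change_scores(year_1, year_2)
changed = [s > 0.2 for s in scores]  # hypothetical threshold
print(changed)
```

The score only says that a pixel changed, not how. Labelling the type of change still needs one of the other methods, such as clustering or classification of the later year.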

Why This Matters

For me, the value of satellite embeddings is clear:

- No preprocessing: cloud masking, atmospheric correction, temporal modelling, and sensor fusion are already handled.

- Compact and efficient: 64 values per pixel replace terabytes of raw imagery.

- Flexible: useful for clustering, classification, regression, and similarity search.

- Accessible: available directly in Earth Engine with no need for GPUs.

- Open licence: free to use for both research and commercial applications; the only requirement is attribution.

In many ways, this dataset acts like a virtual satellite, capturing the essence of multiple sensors and data streams in one compact representation.

Closing Thoughts

Working with Google DeepMind’s AlphaEarth Foundations model through the Satellite Embeddings dataset feels like a turning point. Instead of wrestling with raw imagery, cloud masks, and sensor differences, I can focus on solving real problems: mapping crops, detecting water, monitoring change, or even locating individual objects.

It is analysis-ready, globally consistent, and designed to make downstream Earth observation tasks more accessible. I believe embeddings will become a cornerstone for future geospatial applications — bridging the gap between AI foundation models and practical remote sensing.
